USCH (user-side contextual hallucination) is a non-clinical construct focused on user-side context dynamics, trust calibration, and safety boundaries.

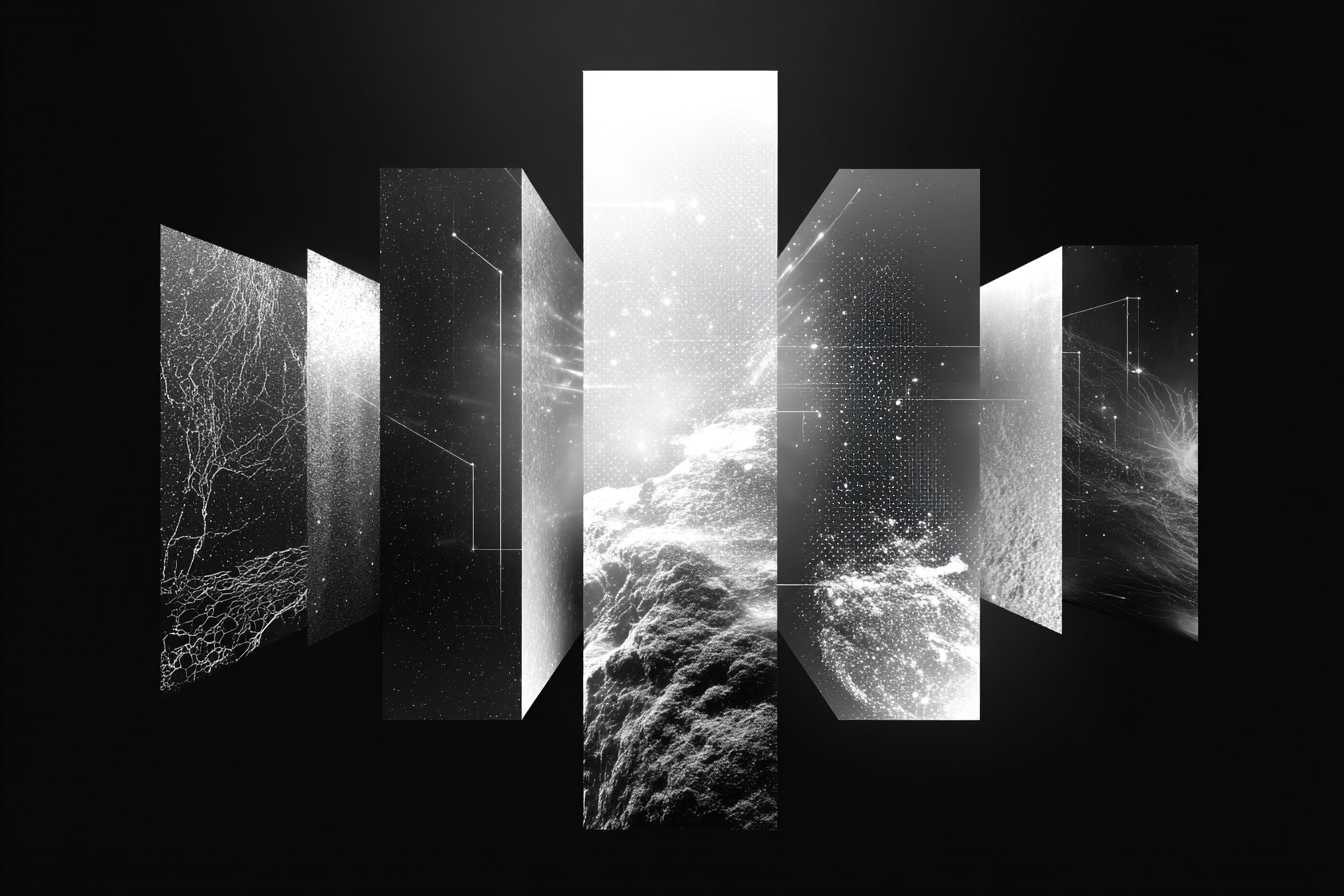

Built on three pillars

Contextual complexity

CXC-7 maps seven dimensions of user context. Temporal layering, semantic density, role ambiguity, assumption accumulation, boundary erosion, coherence strain, and agency distribution shape how misalignment emerges.

Contextual outcome dynamics

CXOD-7 tracks outcome dynamics driving user expectations. Confirmation bias, pattern overgeneralization, exception normalization, reliability inflation, scope creep, constraint forgetting, and risk discounting compound over time.

Coherence and grounding measure

Coh(G) measures coherence between user mental models and actual system grounding. This measure reveals the gap where hallucination takes root and persists.

Six stages of USCH emergence

User context moves through distinct phases, each building on prior conditions and constraints.

Three cuts define USCH

Each criterion marks a distinct boundary in user-side risk formation.

Where context divergence begins

User perception departs from the AI's actual operational constraints.

How risk anchors itself

Misalignment becomes embedded in the user's mental model of the interaction.

What the user can still influence

Agency remains despite contextual hallucination, enabling mitigation strategies.

User-side contextual hallucination emerges when a person interacting with an AI system develops a mental model of the AI's capabilities, constraints, and behavior that diverges from its actual operational parameters. This divergence is not a failure of the AI itself, but rather a natural consequence of how humans construct understanding through conversation. The user builds expectations based on early interactions, contextual cues, and implicit signals. Over time, these expectations can crystallize into beliefs that no longer align with what the system can actually do.

The USCH framework identifies this process as distinct from clinical hallucination because it originates on the user's side of the interaction. The AI may be functioning precisely as designed, yet the user's interpretation of its boundaries, reliability, and scope becomes distorted. This matters because user-side misalignment creates real risks. A person who believes an AI system has capabilities it lacks may make decisions based on false confidence. Conversely, a user who underestimates an AI's reliability may dismiss valid outputs or fail to engage with genuinely useful assistance.

Context dynamics play a central role in how hallucination forms and persists. Early in an interaction, context is sparse and users fill gaps with assumptions. As conversations deepen, context accumulates, but so does the opportunity for misalignment to embed itself. A user might notice inconsistencies in the AI's responses and interpret them as evidence of hidden capabilities rather than as signs of genuine limitations. Trust calibration becomes difficult because the user lacks direct access to the AI's internal state or training data.

The three foundational frameworks—CXC-7, CXOD-7, and Coh(G)—provide the analytical structure for mapping this terrain. CXC-7 identifies seven dimensions along which user context can become complex: temporal layering, semantic density, role ambiguity, assumption accumulation, boundary erosion, coherence strain, and agency distribution. CXOD-7 tracks how outcomes drive user expectations: confirmation bias, pattern overgeneralization, exception normalization, reliability inflation, scope creep, constraint forgetting, and risk discounting. Coh(G) measures the coherence of the user's mental model against the actual grounding of the system's capabilities.
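The sketch below makes the two seven-item frameworks concrete by encoding CXC-7 and CXOD-7 as Python enums and attaching per-session ratings to their dimensions. The `ContextProfile` class, its `rate`/`top` helpers, and the 0.0 to 1.0 rating scale are hypothetical. The framework names the dimensions but does not prescribe any scoring scheme.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Union


class CXC7(Enum):
    """The seven CXC-7 dimensions of user context."""
    TEMPORAL_LAYERING = "temporal layering"
    SEMANTIC_DENSITY = "semantic density"
    ROLE_AMBIGUITY = "role ambiguity"
    ASSUMPTION_ACCUMULATION = "assumption accumulation"
    BOUNDARY_EROSION = "boundary erosion"
    COHERENCE_STRAIN = "coherence strain"
    AGENCY_DISTRIBUTION = "agency distribution"


class CXOD7(Enum):
    """The seven CXOD-7 outcome dynamics."""
    CONFIRMATION_BIAS = "confirmation bias"
    PATTERN_OVERGENERALIZATION = "pattern overgeneralization"
    EXCEPTION_NORMALIZATION = "exception normalization"
    RELIABILITY_INFLATION = "reliability inflation"
    SCOPE_CREEP = "scope creep"
    CONSTRAINT_FORGETTING = "constraint forgetting"
    RISK_DISCOUNTING = "risk discounting"


Dimension = Union[CXC7, CXOD7]


@dataclass
class ContextProfile:
    """Hypothetical per-session ratings; the 0.0-1.0 scale is an assumption."""
    ratings: Dict[Dimension, float] = field(default_factory=dict)

    def rate(self, dim: Dimension, score: float) -> None:
        # Accept only members of the two frameworks, on the assumed scale.
        if not isinstance(dim, (CXC7, CXOD7)):
            raise TypeError("dim must be a CXC7 or CXOD7 member")
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be between 0.0 and 1.0")
        self.ratings[dim] = score

    def top(self, n: int = 3) -> List[Dimension]:
        """Return the n highest-rated dimensions across both frameworks."""
        ranked = sorted(self.ratings.items(), key=lambda kv: kv[1], reverse=True)
        return [dim for dim, _ in ranked[:n]]
```

For example, rating `CXC7.BOUNDARY_EROSION` at 0.8, `CXOD7.RELIABILITY_INFLATION` at 0.9, and `CXC7.SEMANTIC_DENSITY` at 0.2 makes `top(2)` return reliability inflation followed by boundary erosion. Keeping both frameworks in one mapping lets context dimensions and outcome dynamics be ranked against each other.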

Formation of USCH follows a recognizable trajectory. Initial exposure brings awareness of conversational boundaries, though these are often vague. Trust calibration begins as the user tests the system's reliability within stated parameters. Context complexity deepens through multi-turn interaction and semantic layering, creating more surface area for misalignment. Misalignment signals emerge—moments where the AI behaves unexpectedly—but users often reinterpret these signals rather than revise their models. Defensive postures or acceptance patterns develop based on observed behavior. Finally, stabilization occurs as the user integrates risk awareness into their ongoing interaction model, though this awareness may itself be misaligned.
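The six-stage trajectory can be read as a strictly ordered progression. The hypothetical `Trajectory` tracker below advances one stage at a time. For each misalignment signal, it also records whether the user revised their model or reinterpreted the signal instead. The stage identifiers, the two counters, and the reinterpretation rate are illustrative assumptions rather than part of the framework.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    INITIAL_EXPOSURE = 1      # vague awareness of conversational boundaries
    TRUST_CALIBRATION = 2     # user tests reliability within stated parameters
    CONTEXT_DEEPENING = 3     # multi-turn interaction and semantic layering
    MISALIGNMENT_SIGNALS = 4  # the AI behaves unexpectedly
    POSTURE_FORMATION = 5     # defensive postures or acceptance patterns
    STABILIZATION = 6         # risk awareness integrated, possibly misaligned


@dataclass
class Trajectory:
    """Hypothetical tracker for one user's progression through the stages."""
    stage: Stage = Stage.INITIAL_EXPOSURE
    revised: int = 0        # signals that led the user to update their model
    reinterpreted: int = 0  # signals explained away instead

    def advance(self) -> Stage:
        # Stages are strictly ordered; stabilization is terminal.
        if self.stage is not Stage.STABILIZATION:
            self.stage = Stage(self.stage + 1)
        return self.stage

    def record_signal(self, user_revised_model: bool) -> None:
        if user_revised_model:
            self.revised += 1
        else:
            self.reinterpreted += 1

    def reinterpretation_rate(self) -> float:
        # Share of signals reinterpreted rather than used to revise the model.
        total = self.revised + self.reinterpreted
        return self.reinterpreted / total if total else 0.0
```

A high reinterpretation rate would correspond to the pattern described above, in which users explain away unexpected behavior instead of updating their expectations.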

Three criteria distinguish USCH from other forms of user error or miscommunication. The origin cut marks where user perception departs from the AI's actual operational constraints. This is not a matter of the user being uninformed; it is a matter of the user's mental model having diverged from reality in a specific way. The grounding cut identifies how this misalignment becomes embedded in the user's mental model, anchoring itself through repeated interaction and reinforcement. The control cut recognizes that despite contextual hallucination, the user retains agency. They can still influence the interaction, adjust their approach, and implement mitigation strategies if they recognize the misalignment.
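One way to apply the three cuts is as a conjunctive test, in which an interaction counts as USCH only when all three criteria hold. The `Observation` fields and the `cuts_met`/`is_usch` helpers below are hypothetical. How each boolean would be established in practice remains an open question.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Observation:
    """Hypothetical evidence about one user's interaction."""
    specific_divergence: bool     # origin: a concrete belief contradicts actual constraints,
                                  # not merely missing information
    anchored_by_repetition: bool  # grounding: divergence reinforced across turns
    user_retains_agency: bool     # control: user can still adjust or mitigate


def cuts_met(obs: Observation) -> Dict[str, bool]:
    """Report each cut separately so partial matches stay visible."""
    return {
        "origin": obs.specific_divergence,
        "grounding": obs.anchored_by_repetition,
        "control": obs.user_retains_agency,
    }


def is_usch(obs: Observation) -> bool:
    """Conjunctive reading: all three cuts must hold."""
    return all(cuts_met(obs).values())
```

Returning the per-cut breakdown alongside the verdict distinguishes cases that fail at the origin cut, such as a user who is simply uninformed, from cases where a divergence exists but has not yet anchored.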

Defense against USCH operates on two levels. The 1024 protocol focuses on early context calibration, before misalignment takes root. It establishes clear, explicit boundaries and tests the user's understanding of those boundaries in the first few exchanges, which reduces the likelihood that divergent mental models will form. The protocol is named for its emphasis on early intervention: roughly the first thousand tokens of an interaction carry disproportionate weight in shaping user expectations.

VCD, or Vært Context Defense, operates through four constructs:

- Role reframing clarifies what the AI can and cannot do.
- Contextual cognition helps users track the actual scope of the system's knowledge.
- Contextual misalignment detection identifies when user expectations have drifted.
- Context decomposition breaks complex interactions into clearer components.

When misalignment is detected, three operational responses are available:

- Archiving preserves the misaligned context for later review.
- Termination resets the interaction so it can begin again with clearer boundaries.
- Redirection guides the user toward a more accurate mental model.
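The 1024 protocol and the VCD responses can be sketched as a small tracker plus a response selector. This sketch assumes that the protocol's name denotes a 1,024-token early window. `EarlyCalibration`, `choose_response`, the drift score, and its 0.8 threshold are all hypothetical, because the framework does not specify how drift is measured or how a response is chosen.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

# Assumption: the protocol's name is read as a 1,024-token early window.
EARLY_WINDOW_TOKENS = 1024


@dataclass
class EarlyCalibration:
    """Hypothetical tracker for the 1024 protocol's early-calibration steps."""
    tokens_seen: int = 0
    boundaries_stated_at: Optional[int] = None      # token position of the boundary statement
    understanding_checked_at: Optional[int] = None  # token position of the comprehension check

    def observe(self, n_tokens: int) -> None:
        self.tokens_seen += n_tokens

    def mark_boundaries(self) -> None:
        self.boundaries_stated_at = self.tokens_seen

    def mark_understanding_check(self) -> None:
        self.understanding_checked_at = self.tokens_seen

    def calibrated_early(self) -> bool:
        """True if boundaries were stated, then checked, inside the window."""
        b, u = self.boundaries_stated_at, self.understanding_checked_at
        if b is None or u is None:
            return False
        return b <= u <= EARLY_WINDOW_TOKENS


class Response(Enum):
    ARCHIVE = "archive"      # preserve the misaligned context for later review
    TERMINATE = "terminate"  # reset and restart with clearer boundaries
    REDIRECT = "redirect"    # guide the user toward a more accurate model


def choose_response(drift: float, needs_review: bool, threshold: float = 0.8) -> Response:
    """Hypothetical policy; drift is an assumed 0.0-1.0 misalignment score."""
    if drift >= threshold:
        return Response.TERMINATE  # severe drift: start over with clearer boundaries
    if needs_review:
        return Response.ARCHIVE
    return Response.REDIRECT
```

Recording token positions, rather than simple flags, captures both halves of the protocol. Boundaries must be stated before understanding is tested, and both steps must fall inside the early window.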

The distinction between USCH and related phenomena is important. Prompt injection attacks are adversarial attempts to manipulate the AI's behavior. USCH is not adversarial; it emerges naturally from the structure of human-AI conversation. Jailbreaking involves deliberate circumvention of safety measures. USCH involves genuine misunderstanding of what safety measures exist. Hallucination in the classical sense refers to the AI generating false information. USCH refers to the user's false beliefs about the AI's capabilities, which may or may not involve the AI generating false information at all.

The practical implications of USCH extend across multiple domains. In customer service, users may develop inflated expectations of what an AI agent can resolve, leading to frustration when the system cannot deliver. In research and analysis, users may over-rely on AI outputs without understanding the limitations of the training data or the model's tendency toward certain biases. In creative collaboration, users may attribute intentionality or understanding to the AI that it does not possess, leading to miscommunication about what the system can contribute. In high-stakes decision-making, USCH can be particularly dangerous if users believe the AI has validated their reasoning when it has merely reflected their assumptions back to them.

Measuring USCH requires attention to the gap between user belief and system capability. This gap is not always obvious because users may not articulate their beliefs explicitly. Indirect measures include observing whether users request capabilities the system lacks, whether they express surprise at limitations, whether they attribute reasoning to the system that it does not employ, and whether they adjust their interaction patterns based on false assumptions about how the system works. Direct measures involve asking users to articulate their understanding of the system's capabilities and comparing these articulations to documented specifications.
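For the direct measure, comparing a user's articulated capabilities with the documented specifications reduces to set differences. These differences catch both overestimation and underestimation. The sketch below also tallies the four indirect signals. The capability labels, the signal names, and the use of Jaccard overlap as a rough coherence proxy are assumptions, since Coh(G) is not defined numerically here.

```python
from collections import Counter
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set


@dataclass(frozen=True)
class GapReport:
    overclaimed: FrozenSet[str]   # believed capabilities the system does not document
    underclaimed: FrozenSet[str]  # documented capabilities missing from the user's account
    overlap: float                # Jaccard similarity: |A & D| / |A | D|


def direct_gap(articulated: Set[str], documented: Set[str]) -> GapReport:
    """Compare what the user says the system can do with what is documented."""
    union = articulated | documented
    # Treat two empty sets as perfectly coherent to avoid dividing by zero.
    overlap = len(articulated & documented) / len(union) if union else 1.0
    return GapReport(
        overclaimed=frozenset(articulated - documented),
        underclaimed=frozenset(documented - articulated),
        overlap=overlap,
    )


# Hypothetical labels for the four indirect measures.
INDIRECT_SIGNALS = (
    "requested_missing_capability",
    "surprised_by_limitation",
    "attributed_unused_reasoning",
    "adapted_to_false_assumption",
)


def indirect_tally(events: Iterable[str]) -> Counter:
    """Count recognized indirect signals, ignoring unrelated events."""
    return Counter(e for e in events if e in INDIRECT_SIGNALS)
```

Suppose a user articulates `{"web browsing", "summarization", "memory across sessions"}` against a documented set of `{"summarization", "translation"}`. The result flags two overclaimed capabilities and one underclaimed capability, with an overlap of 0.25. An absent item in `underclaimed` may reflect omission rather than disbelief, so it is best treated as a prompt for follow-up rather than as proof of underestimation.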

Mitigation strategies operate at multiple levels. At the system level, clear documentation and explicit boundary statements reduce the likelihood of misalignment. At the interaction level, the 1024 protocol and VCD constructs help maintain alignment as conversations progress. At the user level, education about how AI systems work and what their limitations are can improve mental model accuracy. At the organizational level, policies around AI use that emphasize verification and validation can prevent decisions based on misaligned user beliefs from causing harm.

The research agenda around USCH remains open. Questions about the relationship between user expertise and susceptibility to contextual hallucination need investigation. The role of interface design in promoting or preventing misalignment deserves attention. The interaction between USCH and other forms of user error or system failure requires clarification. The effectiveness of different mitigation strategies across different user populations and use cases needs empirical validation. The long-term effects of repeated interaction with AI systems on user mental models warrant longitudinal study.

Ultimately, USCH is a framework for understanding a fundamental challenge in human-AI interaction: the difficulty of maintaining shared understanding when one party's internal states are hidden from the other. Users cannot see how the AI works, what it knows, or what it is uncertain about. They must infer these things from behavior, and inference is always vulnerable to error. The framework does not solve this problem, but it provides language and structure for recognizing when it occurs and for implementing defenses that reduce its severity.